Digital Signage AI Primer

Guest writeup on Sixteen-Nine.net. The original post is on the Intuiface website.

Excerpt:

Introduction

The world of generative AI is on fire. Super-powered algorithms are writing code, crafting stories, and creating images that would challenge a Turing test. Under the covers, deeply complex machine-learning processes are burrowing through billions of human-created words, graphics, and code, getting more intelligent and more creative by the minute.

And since these algorithms are fully accessible via Web API, they are easily incorporated into your Intuiface experiences.

Let’s spend some time understanding the world of generative AI, its value for digital signage, and how you can use it in Intuiface.

What is Generative AI?

Generative Artificial Intelligence (AI) is a subset of machine learning that enables computers to create new content – such as text, audio, video, images, or code – using the knowledge of previously created content. The output is authentic-looking and completely original.

The algorithms creating this unique content are based on models that reflect lessons learned about a particular topic. These lessons are not programmed; instead, the algorithms teach themselves via a mechanism known as deep learning, refining their models as more and more data about a topic comes in. Among the many fascinating aspects of this technology is the flexibility of the learning engine, which is adaptable to all aspects of human expression. Both the aesthetics of an image and the formalism of JavaScript code are achievable!

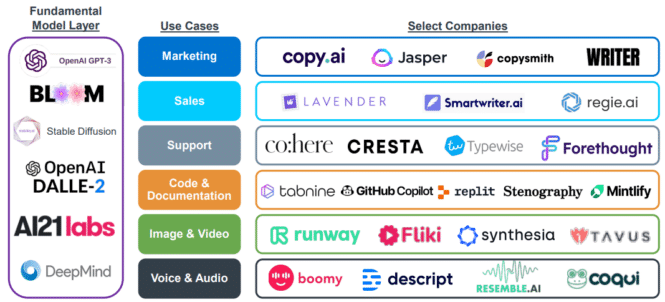

The most well-known example of generative AI is GPT – currently known as GPT-3.5, the latest evolution of the third-generation language prediction model in the GPT series. Created by OpenAI, it’s an algorithm that can be adapted to create images and anything with a language structure. It answers questions, writes essays, develops summaries of longer text items, writes software code, and even translates languages.

To achieve this ability, OpenAI provided the GPT model with around 570GB of text information from the internet. Want to try it out? Head to ChatGPT, create a free account, and start a conversation.

For image generation, the best-known options are DALL·E (based on GPT), Midjourney, and Stable Diffusion. Like ChatGPT, these services take natural language as input, but their output is images. The output can be in any requested style – from art-inspired themes like cubism or impressionism to completely realistic images that look like photographs but were created by an algorithm. Now you can indulge your desire to see “Yoda seated on the Iron Throne from ‘Game of Thrones’ at home plate in Fenway Park.” (We used Stable Diffusion to generate the image below with that exact text.)

How traditional digital signage can take advantage of Generative AI

Generative AI can be an excellent companion technology for creating unique and engaging digital signage experiences. With it, digital signage can dynamically create and display real-time content that perfectly fits the context. This content can be influenced by user behavior or external data sources, from weather forecasts to real-time prices.

Examples include:

- Create context-sensitive images that reflect the current information, environment, or audience.

- Generate summaries and/or translations of unpredictable text like news reports or sports events.

- Rewrite messages with different tones and lengths based on the audience or urgency.
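As a rough illustration of the last example, a signage app could assemble the instruction it sends to a text model from the message plus the desired tone and length. The sketch below only builds the prompt string. The function name `build_rewrite_prompt`, the tone labels, and the word limits are all hypothetical, and the call to an actual generative AI service is left out because it depends on the provider you choose.

```python
def build_rewrite_prompt(message: str, tone: str, max_words: int) -> str:
    """Build a plain-language instruction asking a text model to rewrite a message.

    Hypothetical helper: the wording of the instruction is an assumption,
    not a format required by any particular service.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    return (
        f"Rewrite the following signage message in a {tone} tone, "
        f"using at most {max_words} words.\n"
        f"Message: {message.strip()}"
    )


# The same announcement, requested for two different situations.
calm = build_rewrite_prompt("Gate B12 is now boarding.", "friendly", 12)
urgent = build_rewrite_prompt("Gate B12 is now boarding.", "urgent", 6)
```

Keeping tone and length as parameters means the audience or urgency detected at runtime can drive the request without anyone authoring each variant by hand.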

The most significant hurdle is performance, particularly for image generation, as today’s Generative AI solutions are not (yet) instantaneous. Depending on the complexity of the request and of the desired result, image generation can take a few seconds. As a result, signage must request content ahead of time so that nothing appears late on screen.
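One way to hide that delay is to request the next piece of content while the current one is still on screen. The following is a minimal sketch of that idea, not a description of how Intuiface or any particular service does it. `generate_image` is a stand-in that simulates a slow generative AI call, and `PrefetchingPlaylist` is a hypothetical name.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import List, Optional


def generate_image(prompt: str) -> bytes:
    """Stand-in for a slow generative AI call (hypothetical, no real service)."""
    time.sleep(0.05)  # simulate generation latency
    return f"image for: {prompt}".encode()


class PrefetchingPlaylist:
    """Requests the next item's image while the current item is on screen."""

    def __init__(self, prompts: List[str]) -> None:
        self._prompts = prompts
        self._executor = ThreadPoolExecutor(max_workers=1)
        self._index = 0
        self._pending: Optional[Future] = None
        self._request_next()

    def _request_next(self) -> None:
        # Start generating the next image in the background, if any remain.
        if self._index < len(self._prompts):
            prompt = self._prompts[self._index]
            self._pending = self._executor.submit(generate_image, prompt)
            self._index += 1
        else:
            self._pending = None

    def next_image(self) -> Optional[bytes]:
        """Return the prefetched image, then start generating the one after it."""
        if self._pending is None:
            return None
        image = self._pending.result()  # blocks only if generation is unfinished
        self._request_next()
        return image

    def close(self) -> None:
        self._executor.shutdown()


playlist = PrefetchingPlaylist(["sunny day promo", "rainy day promo"])
first = playlist.next_image()
second = playlist.next_image()
done = playlist.next_image()  # nothing left to show
playlist.close()
```

As long as each item stays on screen longer than generation takes, `next_image` returns immediately and the viewer never sees the wait.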

How interactive digital signage increases Generative AI value

By adopting interactive digital signage, which provides insight into the user’s preferences, you can go further with Generative AI. Now you are not just limited to an external context; you have intimate knowledge of your audience and can communicate accordingly.

By “interactive” we mean any type of human-machine conversation, both active and passive. Active options include touch, gesture, and voice, while passive options include sensors and computer vision. For all modalities, in combination with context and on-screen content, digital signage can clearly identify a user’s interests.

Examples include:

- Use user data to craft personalized “avatars” for the length of their session.

- Add a quirky personality to the interaction, creating jokes and witty asides for the user in what could otherwise be a boring digital engagement.

- Convert a review of shopping cart orders into conversational text to humanize kiosk usage.

- Use anonymous facial recognition technology to identify age/gender and use that information to customize communication.

- Translate ever-changing data sources, like a product catalog or tourist information.

For any natural language scenario, the designer – or the user – could choose to dynamically convert the text to speech (TTS) using either OS-specific services or generative AI voice services like VALL-E.

In all cases, the creative team is freed from having to anticipate the broad range of potential users/scenarios/requirements. They can just rely on a Generative AI resource to do the heavy lifting in real time.

About The Guest Writer

Geoff Bessin is Chief Evangelist at Intuiface, which means he thinks about the intersection of digital interactivity with signage and presentations. Twitter – @geoffbessin